Taeyoun Kim
I am a research assistant at the AIRe Lab at Carnegie Mellon University, advised by Aviral Kumar. I previously earned an MS in Machine Learning, also at CMU, where I was advised by Aditi Raghunathan and funded by the Kwanjeong Foundation. I am interested in reasoning for open domains, self-improvement, RL, safety, and OOD robustness. I aim to understand how to use test-time compute in open domains that are difficult to verify and have large answer spaces, such as long-form math, scientific discovery, and safety. Before CMU, I earned my Bachelor's degree in Electrical & Electronic Engineering from Yonsei University, where my studies were funded by the National Science and Technology Scholarship of South Korea.
CV / Google Scholar / GitHub / LinkedIn / Email

Publications
My recent work studies how reasoning, trained through reinforcement learning, can improve AI safety.

Reasoning as an Adaptive Defense for Safety
Taeyoun Kim, Fahim Tajwar, Aditi Raghunathan, Aviral Kumar
NeurIPS, 2025
arxiv / blog / code / model
We develop TARS, an online RL training recipe that teaches LLMs to reason for safety. It has three key ingredients: (1) lightweight SFT for diversity, (2) mixing in harmless prompts, and (3) separating the reward system. Models trained with TARS outperform larger open-weight models on the safety-refusal frontier, as well as existing defenses such as circuit breakers. They also adaptively allocate test-time compute, reasoning longer on ambiguous prompts, and learn better internal representations of harmful and harmless prompts.

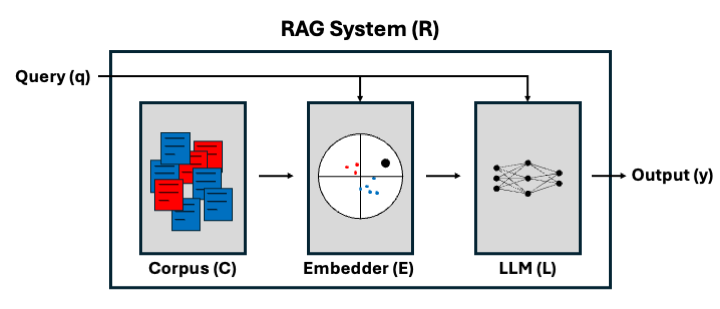

Mitigating Bias in RAG: Controlling the Embedder
Taeyoun Kim, Jacob Springer, Aditi Raghunathan, Maarten Sap
ACL Findings, 2025
arxiv / code
We decompose a RAG system into three components: the LLM, the embedder, and the corpus. Each component can introduce its own bias, and these biases accumulate into complex bias in the overall RAG system. We find that bias can be mitigated simply by reverse-biasing the embedder. Furthermore, we empirically find a linear relationship between the embedder's bias and the RAG system's bias, with a sensitivity that varies across LLMs. We compare three methods for mitigating bias (fine-tuning, projection, and stochastic ranking) and find that fine-tuning reduces bias while maintaining utility. We also find that a reverse-biased embedder makes the entire RAG system robust to variations in corpus bias.

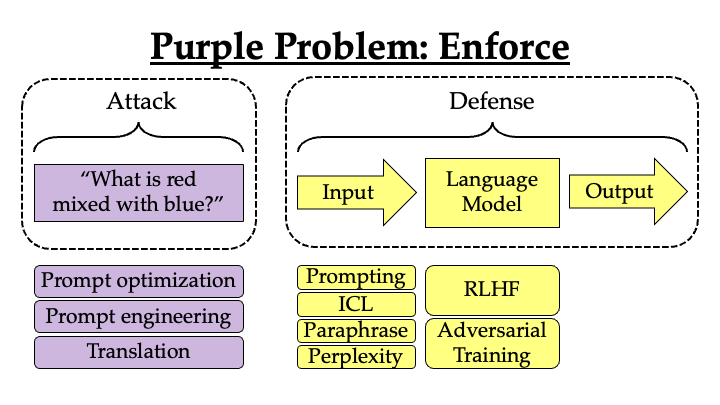

Testing the Limits of Jailbreaking Defenses with the Purple Problem
Taeyoun Kim*, Suhas Kotha*, Aditi Raghunathan
NeurIPS Safe GenAI, 2024
arxiv / code
The Purple Problem asks whether jailbreaking defenses can succeed under the simplest possible definition of unsafe output: preventing the model from outputting the word "purple." Every defense we consider fails to enforce the Purple Problem. Moreover, adaptive attacks and increased compute reveal that existing defenses are weaker than reported.

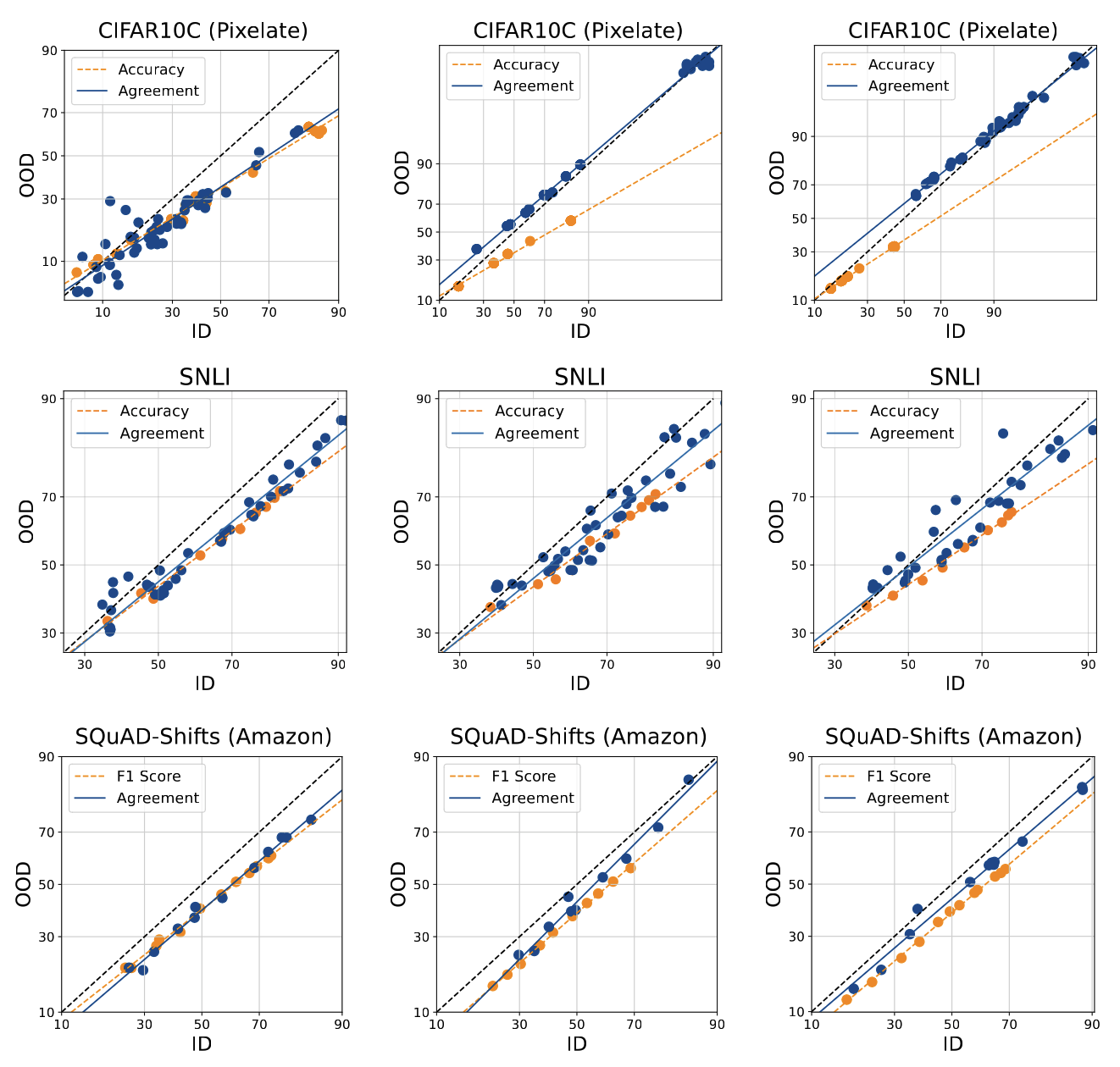

Predicting the Performance of Foundation Models via Agreement-on-the-Line
Rahul Saxena*, Taeyoun Kim*, Aman Mehra*, Christina Baek, Zico Kolter, Aditi Raghunathan
NeurIPS, 2024
arxiv
We apply Agreement-on-the-Line (AGL) to predict the OOD performance of foundation models. Interestingly, we find that randomly initializing the linear head before fine-tuning yields the highest diversity across an ensemble of models, which is what allows the ensemble to exhibit AGL. This holds even for linear probing.

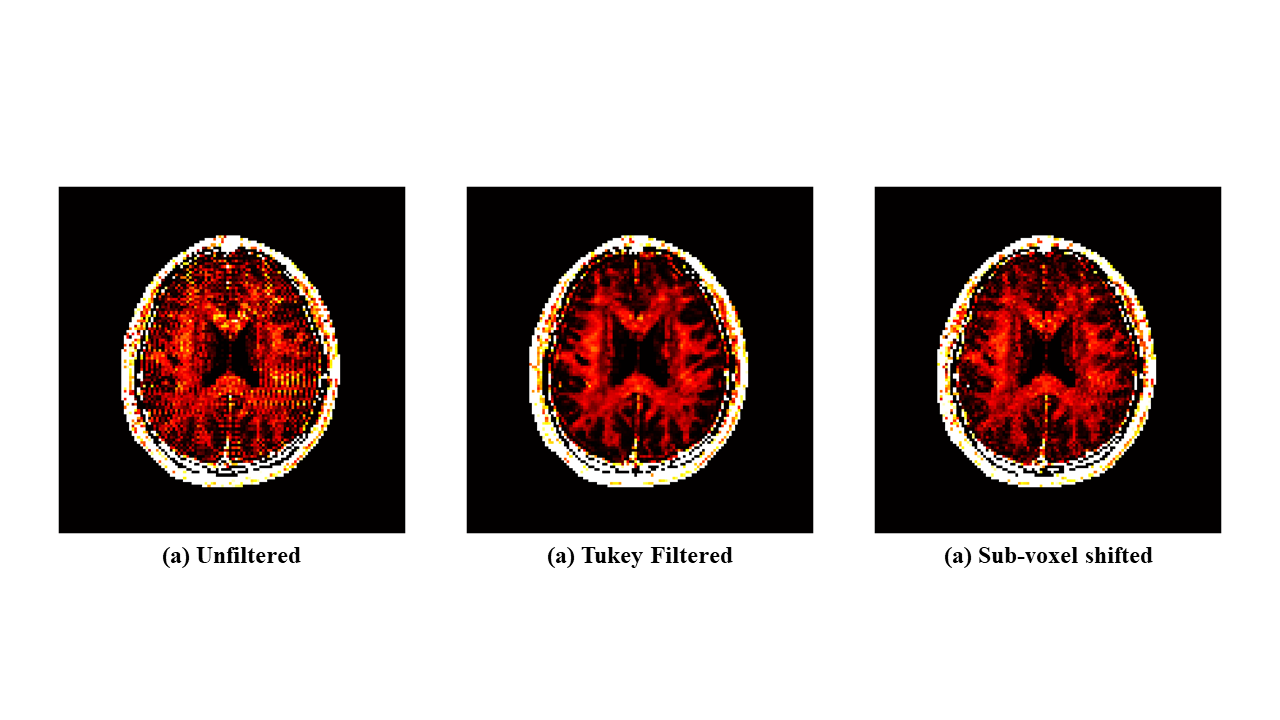

The Application of Local Sub-voxel Shifting on Multi-echo GRE-based Myelin Water Imaging
Taeyoun Kim, Muyul Park, Jaeuk Yi, Dong-Hyun Kim
ICMRI (Oral), 2021
We apply local sub-voxel shifting to reduce Gibbs noise in multi-echo GRE-based Myelin Water Imaging. To do this, we design a new exponential saddle filter. Compared to Tukey filtering, it removes Gibbs noise while better preserving image quality and avoiding blurring.

Projects
Ongoing and past projects

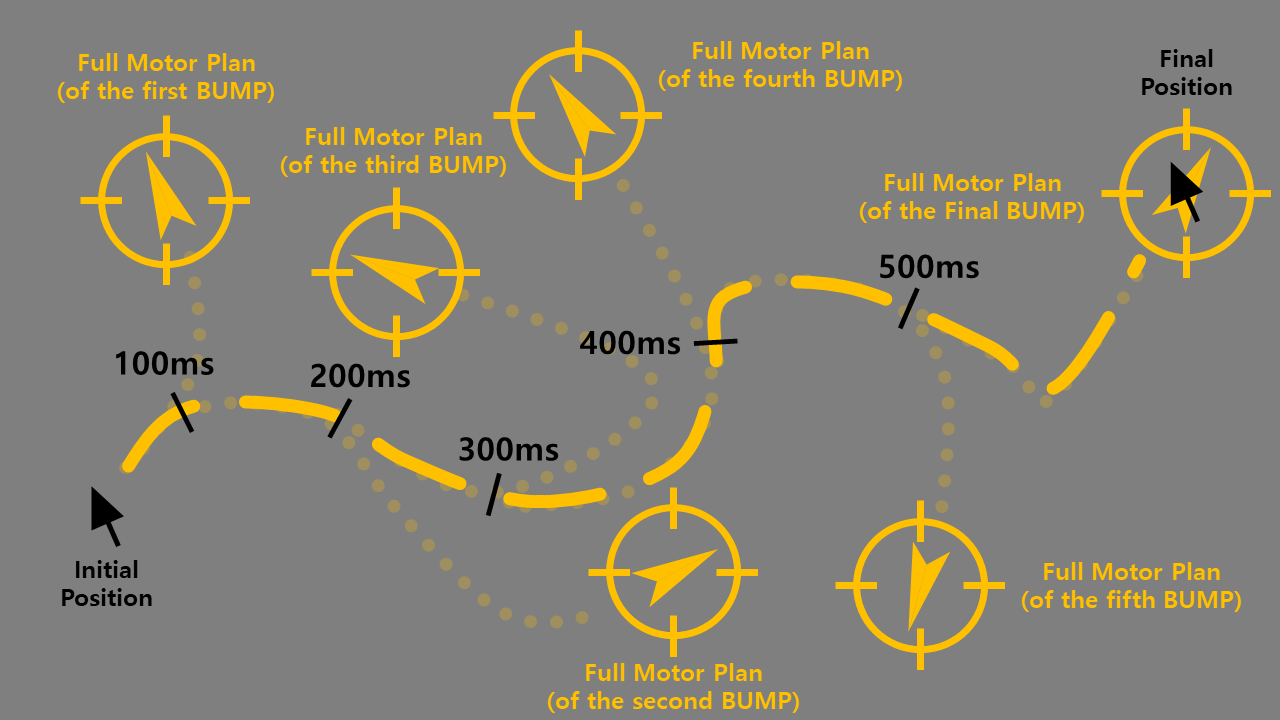

Generalizing Point-and-Click Behavior to Vision
Ongoing
We model human point-and-click behavior with Soft Actor-Critic to understand human motor control within the BUMP process. We then generalize point-and-click to any visual task for which a target region and an avoidance region are provided.

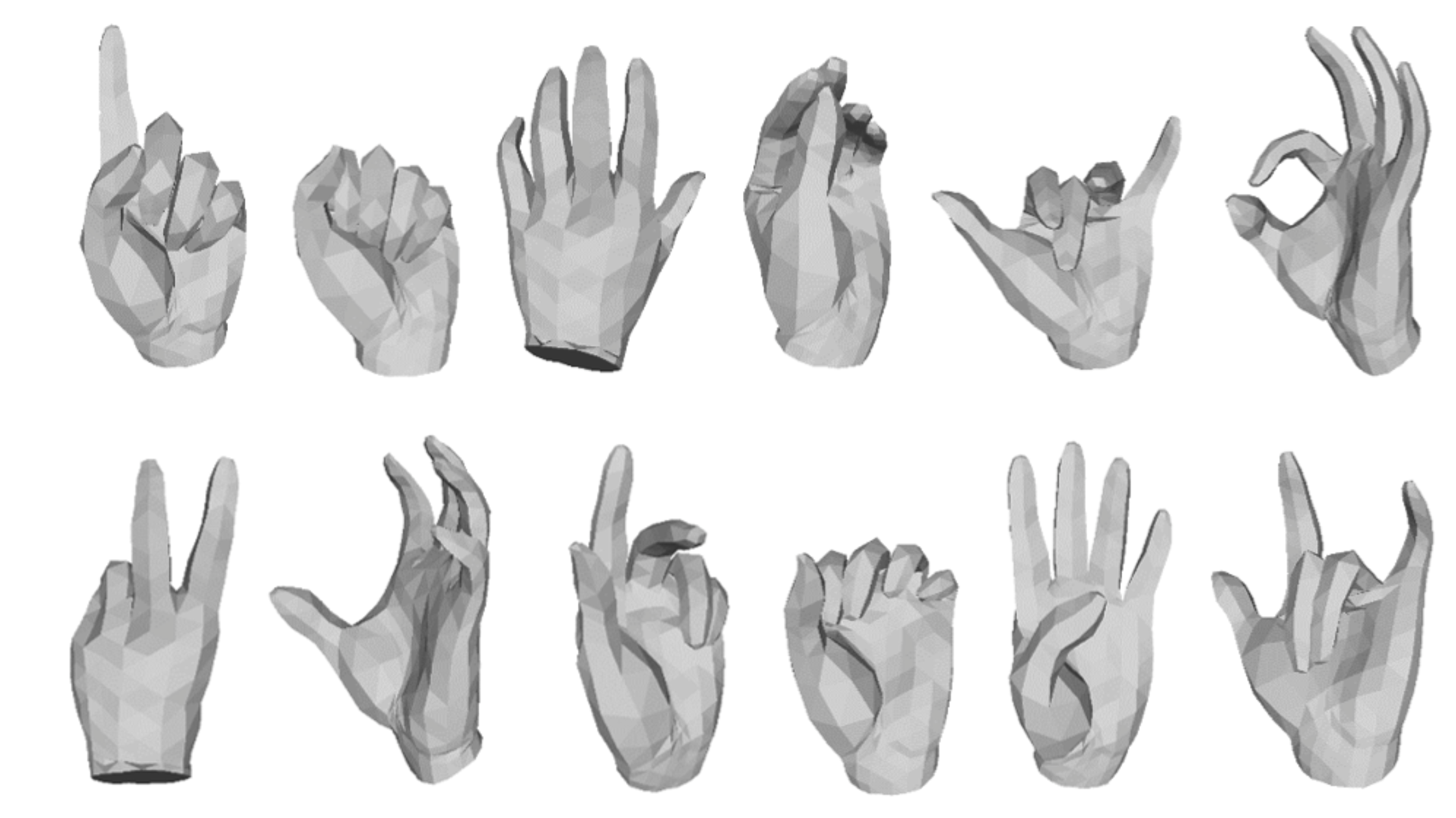

Impact of Different Joints on Creating a 3D Hand Mesh
Taeyoun Kim*, Hoseok Tong*, Jinoh Lee*
Undergraduate Thesis
We use a PointNet to reconstruct hand meshes from 26 hand joints captured by Microsoft HoloLens 2. By studying the impact of the fingertip and metacarpal joints, we show that the fingertips are crucial for optimizing the network.